Attackers have leveraged social engineering in several high-profile hacks in recent months, with organizations like Uber, Rockstar Games, Cloudflare, Cisco, and LastPass among the best-known targets.

Social engineering is the manipulation of a user, often through fear or doubt, to coax them into actions like revealing credentials or other sensitive information. The threat landscape is teeming with social engineering attempts across all forms of digital messaging, including email, Slack, and SMS. Moreover, spear-phishing, watering hole attacks, and spoofing are growing increasingly sophisticated.

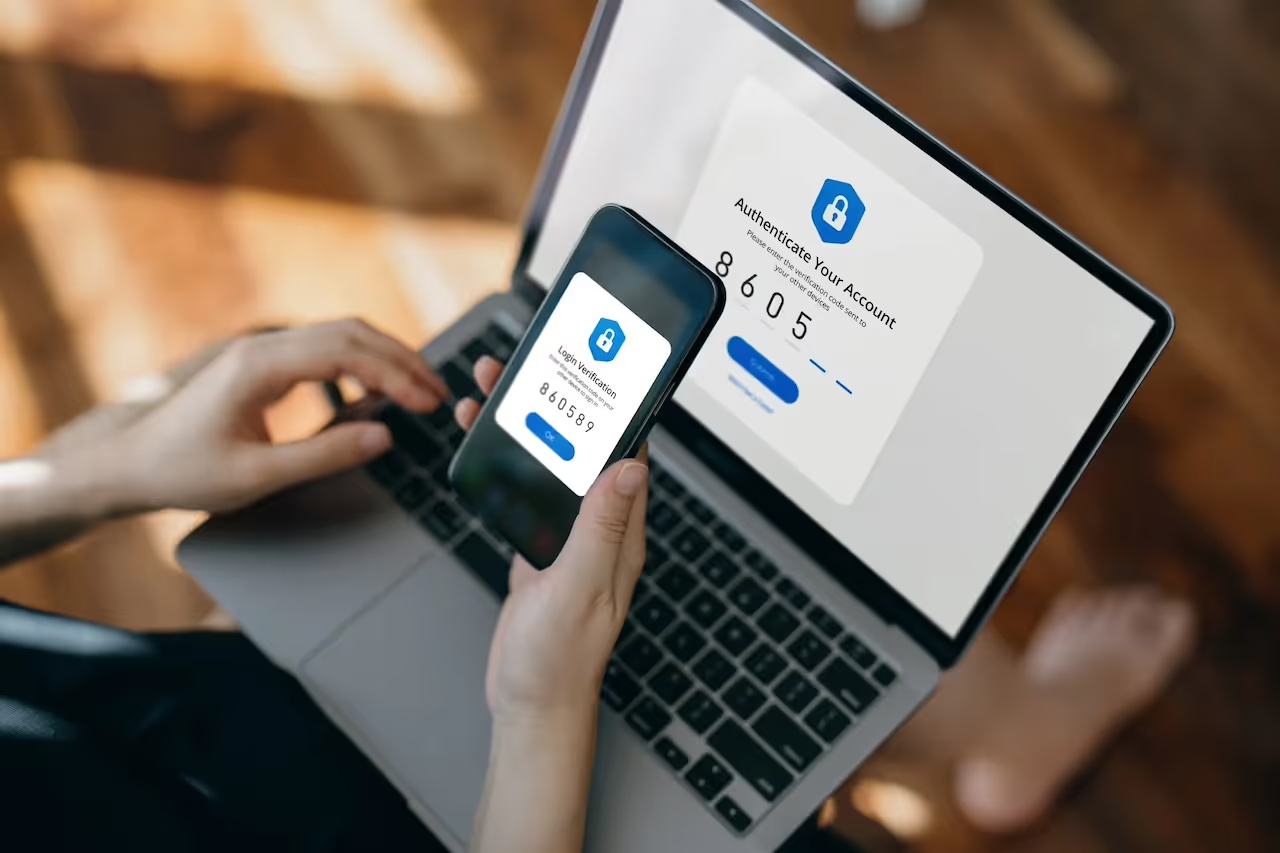

Organizations are taking numerous defensive measures in response. These include ramping up security education efforts and configuring multi-factor authentication (MFA). But while MFA strengthens security, it can still be thwarted by hackers, and security awareness training programs often yield mixed or disappointing results. Now, organizations are increasingly turning to artificial intelligence to stop cyber-attacks carried out through social engineering.

Since application-based transportation companies face distinct risks with their complex digital infrastructure, they require dynamic security solutions that adapt to evolving phishing techniques to guarantee reliable service to their customers. To that end, the Bluebird Group, the largest taxi service in Indonesia, has been using Darktrace to protect its email and cloud-based messaging since 2021.

“While we’ve pivoted and shown flexibility in the face of change, so too have the attackers,” said Sigit Djokosoetono, CEO at PT Blue Bird Tbk, a subsidiary of The Bluebird Group. “We’ve seen an uptick in attacks targeting cloud and SaaS applications, for example. Phishing emails are becoming more realistic and more frequent.”

Traditional email defenses lag behind contemporary social engineering threats because they rely on threat intelligence and on compiling “deny-lists” of email domains and IP addresses already recognized as bad. But attackers can set up new domains for pennies, and they update their infrastructure too frequently for this method to be effective.
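The limitation of a static deny-list can be illustrated with a minimal sketch. The domains, the `DENY_LIST` set, and the helper names below are hypothetical and exist only for illustration; they do not represent any particular product's implementation.

```python
# Hypothetical deny-list of sender domains already reported as malicious.
DENY_LIST = {"bad-payroll.example", "login-verify.example"}


def sender_domain(address: str) -> str:
    """Return the lowercased domain part of an email address."""
    return address.rsplit("@", 1)[-1].lower()


def is_blocked(address: str) -> bool:
    """Static check: block only senders whose domain is already listed."""
    return sender_domain(address) in DENY_LIST


known = "hr@bad-payroll.example"
fresh = "hr@bad-payroll-2.example"  # newly registered look-alike domain

print(is_blocked(known))  # True: the domain is already on the list
print(is_blocked(fresh))  # False: the new domain passes until someone reports it
```

The second check passes only because the domain has not been reported yet, which is the gap the paragraph above describes.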

Darktrace’s unique approach to cyber security stops these attacks. Self-Learning AI learns the who, what, when, and where of every email user’s communication patterns. This evolving and multi-dimensional understanding allows the AI to spot subtle signs of a social engineering attack, regardless of whether it is known or novel and regardless of the tactics used.
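To make the general idea of a per-user communication baseline concrete, here is a deliberately simplified toy sketch. It is not Darktrace's algorithm, which the article does not describe in detail; the class `CommunicationBaseline`, its methods, and the example addresses are all assumptions made for illustration. It tracks only two of the dimensions mentioned above (who and when) and flags anything not seen before.

```python
from collections import defaultdict


class CommunicationBaseline:
    """Toy per-recipient baseline of past senders and delivery hours."""

    def __init__(self) -> None:
        self.seen_senders = defaultdict(set)  # recipient -> sender addresses
        self.seen_hours = defaultdict(set)    # recipient -> hours of day (0-23)

    def observe(self, recipient: str, sender: str, hour: int) -> None:
        """Record one legitimate message in the recipient's history."""
        self.seen_senders[recipient].add(sender)
        self.seen_hours[recipient].add(hour)

    def unusual_signals(self, recipient: str, sender: str, hour: int) -> list:
        """List the ways a new message deviates from the recipient's history."""
        signals = []
        if sender not in self.seen_senders.get(recipient, set()):
            signals.append("new sender")
        if hour not in self.seen_hours.get(recipient, set()):
            signals.append("unusual hour")
        return signals


baseline = CommunicationBaseline()
for hour in (9, 10, 14):
    baseline.observe("ana@corp.example", "finance@corp.example", hour)

# A familiar sender at a familiar time raises no signals.
print(baseline.unusual_signals("ana@corp.example", "finance@corp.example", 10))
# → []

# A look-alike sender at 3 a.m. deviates on both dimensions.
print(baseline.unusual_signals("ana@corp.example", "finance@c0rp.example", 3))
# → ['new sender', 'unusual hour']
```

Unlike a deny-list, this kind of check does not need the look-alike domain to have been reported before. A real system would weigh many more signals and learn them continuously rather than relying on exact set membership.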

If an employee’s credentials are used as part of a social engineering hack, Darktrace can identify the hacker’s malicious behavior. It then makes micro-decisions to neutralize the attack within seconds, stopping the offending message without disruption to the business.

“Darktrace’s AI-powered email security solution has reduced our email threats – such as spear phishing and spoofing – by 95% because it takes autonomous action to contain malicious emails before they reach a user. We can’t expect humans to spot the difference between a real and a fake anymore – it’s not sustainable,” said Djokosoetono.

More recently, social engineering has spread beyond email to other platforms like Slack and Microsoft Teams, which can be more difficult for security teams to manage. Darktrace takes a holistic approach to security and can be installed anywhere an organization has data. The various coverage areas are united through the Self-Learning AI, which looks at every area of the digital estate to reveal the full scope of an attack, even as the attacker traverses multiple digital environments.

“For our employees, a weight is lifted from their shoulders,” said Djokosoetono. “When it comes to something like phishing emails, training on how to spot these is important but we simply cannot put the onus on humans to spot these well-researched, targeted email attacks. With AI in place, we’re stopping these threats before humans have to deal with them.”

Darktrace’s AI is always on and works at machine speed to protect companies, so employees can focus on producing their best work without the constant fear of malicious messaging.