AI adoption in cybersecurity: Beyond the hype

Security operations today face a paradox. On one hand, artificial intelligence (AI) promises sweeping transformation, from automating routine tasks to augmenting threat detection and response. On the other hand, security leaders are under immense pressure to separate meaningful innovation from vendor hype.

To help CISOs and security teams navigate this landscape, we’ve developed the most in-depth and actionable AI Maturity Model in the industry. Built in collaboration with AI and cybersecurity experts, this framework provides a structured path to understanding, measuring, and advancing AI adoption across the security lifecycle.

Why a maturity model? And why now?

In our conversations and research with security leaders, a recurring theme has emerged:

There’s no shortage of AI solutions, but there is a shortage of clarity and understanding of AI use cases.

In fact, Gartner estimates that “by 2027, over 40% of Agentic AI projects will be canceled due to escalating costs, unclear business value, or inadequate risk controls.” Teams are experimenting, but many aren’t seeing meaningful outcomes. The need for a standardized way to evaluate progress and make informed investments has never been greater.

That’s why we created the AI Security Maturity Model, a strategic framework that:

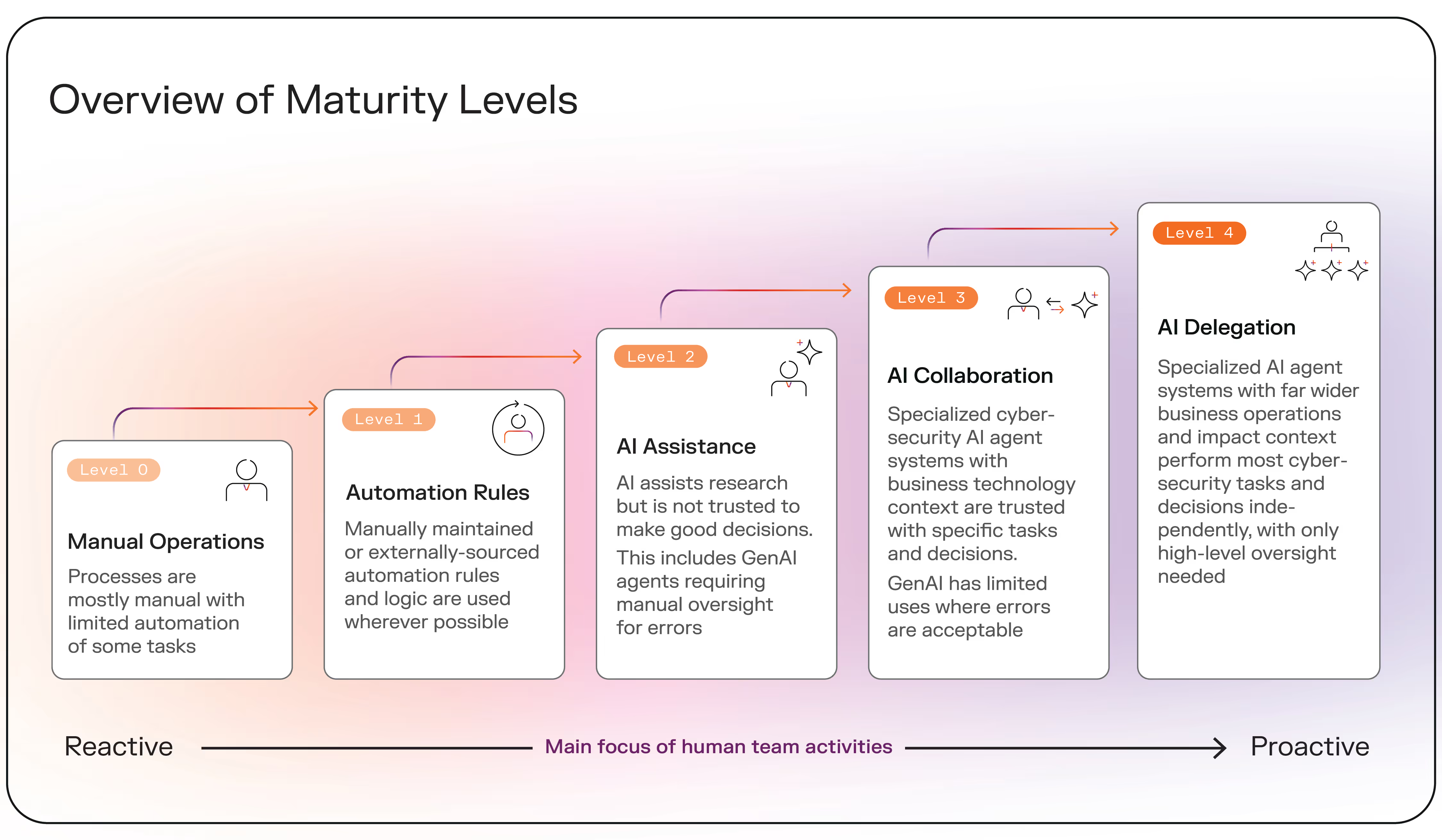

- Defines five clear levels of AI maturity, from manual processes (L0) to full AI Delegation (L4)

- Delineates the outcomes of Agentic GenAI versus those of Specialized AI Agent Systems

- Applies across core functions such as risk management, threat detection, alert triage, and incident response

- Links AI maturity to real-world outcomes like reduced risk, improved efficiency, and scalable operations


How is maturity assessed in this model?

The AI Maturity Model for Cybersecurity is grounded in operational insights from nearly 10,000 global deployments of Darktrace's Self-Learning AI and Cyber AI Analyst. Rather than relying on abstract theory or vendor benchmarks, the model reflects what security teams are actually doing: where AI is being adopted, how it's being used, and what outcomes it’s delivering.

This real-world foundation allows the model to offer a practical, experience-based view of AI maturity. It helps teams assess their current state and identify realistic next steps based on how organizations like theirs are evolving.

Why Darktrace?

AI has been central to Darktrace’s mission since its inception in 2013, not just as a feature, but as the foundation. With over a decade of experience building and deploying AI in real-world security environments, we’ve learned where it works, where it doesn’t, and how to get the most value from it.

We've learned that modern businesses operate within a vast, interconnected ecosystem, which introduces new complexities and vulnerabilities that make traditional cybersecurity approaches unsustainable. While many vendors use machine learning, not all AI tools are created equal.

Darktrace’s Self-Learning AI uses a multi-layered AI approach, learning your unique organization to deliver proactive and resilient defense against today’s sophisticated threats. By strategically integrating a diverse set of AI techniques, such as machine learning, deep learning, LLMs, and natural language processing, both sequentially and hierarchically, our multi-layered AI approach provides a robust defense mechanism that is unique to your organization and adapts to the evolving threat landscape.

This model reflects that insight, helping security leaders find the right path forward for their people, processes, and tools.

Security teams today are asking big, important questions:

- What should we actually use AI for?

- How are other teams using it, and what’s working?

- What are vendors offering, and what’s just hype?

- Will AI ever replace people in the SOC?

These questions are valid, and they’re not always easy to answer. That’s why we created this model: to help security leaders move past buzzwords and build a clear, realistic plan for applying AI across the SOC.

The structure: From experimentation to autonomy

The model outlines five levels of maturity:

L0 – Manual Operations: Processes are mostly manual with limited automation of some tasks.

L1 – Automation Rules: Manually maintained or externally sourced automation rules and logic are used wherever possible.

L2 – AI Assistance: AI assists research but is not trusted to make good decisions. This includes GenAI agents requiring manual oversight for errors.

L3 – AI Collaboration: Specialized cybersecurity AI agent systems with business technology context are trusted with specific tasks and decisions. GenAI has limited uses where errors are acceptable.

L4 – AI Delegation: Specialized AI agent systems with far wider business operations and impact context perform most cybersecurity tasks and decisions independently, with only high-level oversight needed.

Each level reflects a shift, not only in technology, but in people and processes. As AI matures, analysts evolve from executors to strategic overseers.
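For teams that want to track the levels in their own tooling, the structure above can be sketched as a small data type. This is only an illustrative sketch, not part of the model itself: the names `MaturityLevel`, `LABELS`, `label`, and `ai_trusted_with_decisions` are hypothetical, and the threshold at L3 is an assumption read from the level descriptions (L2 is "not trusted to make good decisions", while L3 is "trusted with specific tasks and decisions").

```python
from enum import IntEnum


class MaturityLevel(IntEnum):
    """The five levels named in the model, L0 through L4."""
    MANUAL_OPERATIONS = 0
    AUTOMATION_RULES = 1
    AI_ASSISTANCE = 2
    AI_COLLABORATION = 3
    AI_DELEGATION = 4


# Display names copied from the level headings in the text.
LABELS = {
    MaturityLevel.MANUAL_OPERATIONS: "Manual Operations",
    MaturityLevel.AUTOMATION_RULES: "Automation Rules",
    MaturityLevel.AI_ASSISTANCE: "AI Assistance",
    MaturityLevel.AI_COLLABORATION: "AI Collaboration",
    MaturityLevel.AI_DELEGATION: "AI Delegation",
}


def label(level: MaturityLevel) -> str:
    """Return a heading-style label such as 'L3: AI Collaboration'."""
    return f"L{int(level)}: {LABELS[level]}"


def ai_trusted_with_decisions(level: MaturityLevel) -> bool:
    """Assumption: AI is trusted with at least some decisions from L3 upward."""
    return level >= MaturityLevel.AI_COLLABORATION


if __name__ == "__main__":
    for lvl in MaturityLevel:
        print(label(lvl), "| AI trusted with decisions:", ai_trusted_with_decisions(lvl))
```

Using an ordered enum keeps comparisons such as "at or above L3" explicit, which mirrors how the model describes trust expanding level by level.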

Strategic benefits for security leaders

The maturity model isn’t just about technology adoption; it’s about aligning AI investments with measurable operational outcomes. Here’s what it enables:

SOC fatigue is real, and AI can help

Most teams still struggle with alert volume, investigation delays, and reactive processes. AI adoption is inconsistent and often siloed. When integrated well, AI can make security teams meaningfully more effective.

GenAI is error-prone, requiring strong human oversight

While there is a lot of hype around Agentic GenAI systems, teams will need to account for their inaccuracy and hallucinations.

AI’s real value lies in progression

The biggest gains don’t come from isolated use cases, but from integrating AI across the lifecycle, from preparation through detection to containment and recovery.

Trust and oversight are key initially but evolve in later levels

Early-stage adoption keeps humans fully in control. By L3 and L4, AI systems act independently within defined bounds, freeing humans for strategic oversight.

People’s roles shift meaningfully

As AI matures, analyst roles consolidate and elevate from labor-intensive task execution to high-value decision-making, focusing on critical, high-business-impact activities, process improvement, and AI governance.

Outcome, not hype, defines maturity

AI maturity isn’t about tech presence; it’s about measurable impact on risk reduction, response time, and operational resilience.


Outcomes across the AI Security Maturity Model

Cybersecurity outcomes evolve as the security organization progresses from manual operations to AI delegation. Each level represents a step-change in efficiency, accuracy, and strategic value.

L0 – Manual Operations

At this stage, analysts handle triage, investigation, patching, and reporting manually using basic, non-automated tools. The result is reactive, labor-intensive operations where most alerts go uninvestigated and risk management remains inconsistent.

L1 – Automation Rules

At this stage, analysts manage rule-based automation tools like SOAR and XDR, which offer some efficiency gains but still require constant tuning. Operations remain constrained by human bandwidth and predefined workflows.

L2 – AI Assistance

At this stage, AI assists with research, summarization, and triage, reducing analyst workload but requiring close oversight due to potential errors. Detection improves, but trust in autonomous decision-making remains limited.

L3 – AI Collaboration

At this stage, AI performs full investigations and recommends actions, while analysts focus on high-risk decisions and refining detection strategies. Purpose-built agentic AI systems with business context are trusted with specific tasks, improving precision and prioritization.

L4 – AI Delegation

At this stage, Specialized AI Agent Systems perform most security tasks independently at machine speed, while human teams provide high-level strategic oversight. This means the human security team devotes most of its time and effort to proactive activities while AI handles routine cybersecurity tasks.

Specialized AI Agent Systems operate with deep business context, including impact context, to drive fast, effective decisions.

Join the webinar

Get a look at the minds shaping this model by joining our upcoming webinar using this link. We’ll walk through real use cases, share lessons learned from the field, and show how security teams are navigating the path to operational AI safely, strategically, and successfully.

Find your place in the AI maturity model

Get the self-guided assessment designed to help you benchmark your current maturity level, identify key gaps, and prioritize next steps.
