What is Qilin Ransomware and what's its impact?

Qilin ransomware has recently dominated discussions across the cyber security landscape following its deployment in an attack on Synnovis, a UK-based medical laboratory company. The ransomware attack ultimately affected patient services at multiple National Health Service (NHS) hospitals that rely on Synnovis diagnostic and pathology services. Qilin’s origins, however, date back further to October 2022 when the group was observed seemingly posting leaked data from its first known victim on its Dedicated Leak Site (DLS) under the name Agenda[1].

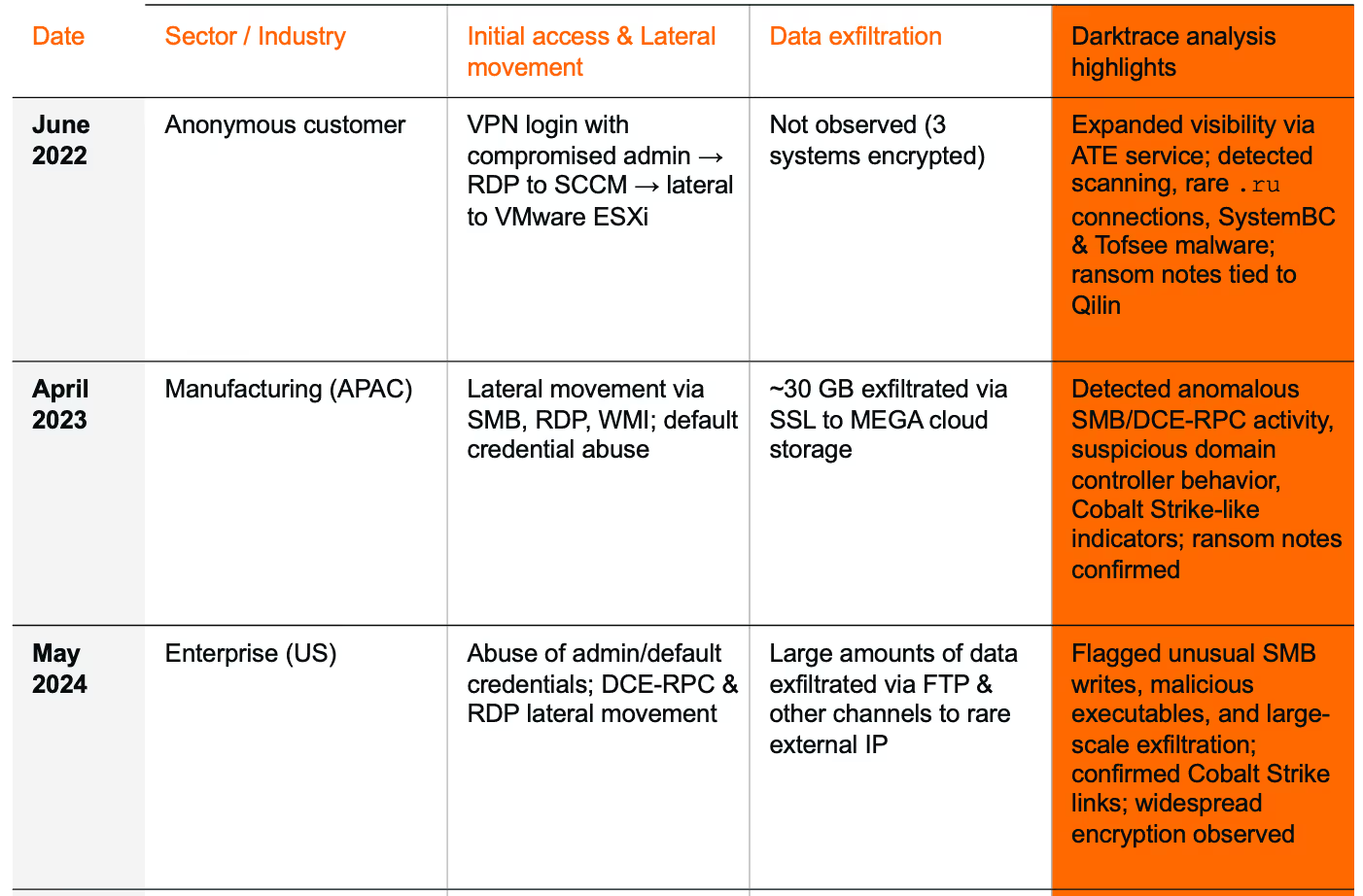

The Darktrace Threat Research team investigated network artifacts related to Qilin and identified three probable cases of the ransomware across the Darktrace customer base between June 2022 and May 2024.

How Qilin Ransomware operates as RaaS

Qilin operates as a Ransomware-as-a-Service (RaaS) that employs double extortion tactics, whereby harvested data is exfiltrated and the victim is threatened with its publication on the group's DLS, which is hosted on Tor. Qilin ransomware samples have been written in both the Golang and Rust programming languages, allowing the ransomware to be compiled for various operating systems, and it is highly customizable.

Techniques Qilin Ransomware uses to avoid detection

When building Qilin ransomware variants to be used on their target(s), affiliates can configure settings such as:

- Encryption modes (skip-step, percent, or speed)

- File extensions, directories, or processes to exclude

- Unique company IDs used as extensions on encrypted files

- Services or processes to terminate during execution [1] [2]

Trend Micro analysts, who first discovered Qilin samples in August 2022, when the name "Agenda" was still used in ransom notes, found that each analyzed sample was customized for the intended victim and that "unique company IDs were used as extensions of encrypted files" [3]. This information is configurable in the 'Targets' section of Qilin's affiliate panel.

Qilin's affiliate panel and branding

The panel's background image features the eponymous Qilin, a chimerical creature from Chinese legend (pronounced “Ke Lin”). Despite this reference to Chinese mythology, a Qilin operator was observed using Russian in an underground forum post aimed at hiring affiliates and advertising the RaaS operation[2].

Qilin’s affiliate payment model

Qilin's RaaS program purportedly offers affiliates an attractive payment structure:

- Affiliates earn 80% of ransom payments under USD 3 million

- Affiliates earn 85% of ransom payments above USD 3 million [2]
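As a worked illustration of the reported split, the sketch below computes the affiliate and operator portions for two hypothetical payment amounts. The function name and the sample amounts are illustrative only. The source describes payments "under" and "above" USD 3 million and does not say how a payment of exactly USD 3 million is treated, so the sketch assumes the lower tier applies in that case.

```python
from decimal import Decimal

# Threshold and rates as reported in the payment structure above [2].
THRESHOLD_USD = Decimal("3000000")
RATE_BELOW = Decimal("0.80")
RATE_ABOVE = Decimal("0.85")


def affiliate_share(ransom_usd: Decimal) -> Decimal:
    """Return the affiliate's reported cut of a ransom payment, in USD.

    Assumption: a payment of exactly USD 3 million falls in the lower tier,
    because the source only describes amounts "under" and "above" it.
    """
    rate = RATE_ABOVE if ransom_usd > THRESHOLD_USD else RATE_BELOW
    return ransom_usd * rate


# Hypothetical payment amounts, chosen to show one case per tier.
for payment in (Decimal("1000000"), Decimal("5000000")):
    cut = affiliate_share(payment)
    print(f"payment={payment} affiliate={cut} operators={payment - cut}")
```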

Publication of stolen data and ransom payment negotiations are purportedly handled by Qilin operators. Qilin affiliates have been known to target companies located around the world and within a variety of industries, including critical sectors such as healthcare and energy.

Qilin target industries and victims

As Qilin is a RaaS operation, the choice of targets does not necessarily reflect Qilin operators' intentions, but rather that of its affiliates.

Similarly, the tactics, techniques, and procedures (TTPs) and indicators of compromise (IoCs) identified by Darktrace are associated with the particular affiliate deploying Qilin ransomware for its own purposes, rather than with the Qilin group itself. Likewise, initial infection vectors may vary from affiliate to affiliate.

Previous studies show that initial access to networks was gained via spear phishing emails or by leveraging exposed applications and interfaces.

Differences have been observed in terms of data exfiltration and potential C2 external endpoints, suggesting the below investigations are not all related to the same group or actor(s).

Darktrace’s threat research investigation

June 2022: Qilin ransomware attack exploiting VPN and SCCM servers

Key findings

- Initial access: VPN and compromised admin account

- Lateral movement: SCCM and VMware ESXi hosts

- Malware observed: SystemBC, Tofsee

- Ransom notes: Linked to Qilin naming conventions

- Darktrace visibility: Analysts worked with customer via Ask the Expert (ATE) to expand coverage, revealing unusual scanning, rare external connections, and malware indicators tied to Qilin

Full story

Darktrace first detected an instance of Qilin ransomware back in June 2022, when an attacker was observed successfully accessing a customer’s Virtual Private Network (VPN) and compromising an administrative account, before using RDP to gain access to the customer’s Microsoft System Center Configuration Manager (SCCM) server.

From there, an attack against the customer's VMware ESXi hosts was launched. Fortunately, a reboot of their virtual machines (VMs) caught the attention of the security team, who further uncovered that custom profiles had been created and remote scripts executed to change root passwords on their VM hosts. Three accounts were found to have been compromised and three systems encrypted by ransomware.

Unfortunately, Darktrace was not configured to monitor the affected subnets at the time of the attack. Despite this, the customer was able to work directly with Darktrace analysts via the Ask the Expert (ATE) service to add the subnets in question to Darktrace’s visibility, allowing it to monitor for any further unusual behavior.

Once visibility over the compromised SCCM server was established, Darktrace observed:

- A series of unusual network scanning activities

- The use of Kali (a Linux distribution designed for digital forensics and penetration testing)

- Connections to multiple rare external hosts, many of which were using the “[.]ru” Top Level Domain (TLD)

One of the external destinations the server was attempting to connect to was found to be related to SystemBC, malware that turns infected hosts into SOCKS5 proxy bots and provides command-and-control (C2) functionality.

Additionally, the server was observed making external connections over ports 993 and 143 (typically associated with the Internet Message Access Protocol (IMAP)) to multiple rare external endpoints. This was likely due to the presence of Tofsee malware on the device.

After the compromise had been contained, Darktrace identified several ransom notes following the naming convention “README-RECOVER-<extension/company_id>.txt” on the network. This naming convention, as well as the similar “<company_id>-RECOVER-README.txt”, has been referenced by open-source intelligence (OSINT) providers as associated with Qilin ransom notes[5] [6] [7].
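The two naming conventions can be expressed as simple filename patterns, for example when reviewing file-write logs. The following is a minimal sketch rather than a description of how Darktrace identifies ransom notes. It assumes the company ID is a token of letters, digits, and underscores, which the source does not specify, and the sample ID used in the usage lines is made up.

```python
import re
from typing import Optional

# Assumption: the ID portion consists of letters, digits and underscores.
# The source gives only the surrounding filename structure.
NOTE_PATTERNS = (
    re.compile(r"^README-RECOVER-(?P<company_id>[A-Za-z0-9_]+)\.txt$", re.IGNORECASE),
    re.compile(r"^(?P<company_id>[A-Za-z0-9_]+)-RECOVER-README\.txt$", re.IGNORECASE),
)


def extract_company_id(filename: str) -> Optional[str]:
    """Return the ID embedded in a Qilin-style ransom note name, else None."""
    for pattern in NOTE_PATTERNS:
        match = pattern.match(filename)
        if match:
            return match.group("company_id")
    return None


# Hypothetical filenames; "abc123XYZ9" is an invented ID.
for name in ("README-RECOVER-abc123XYZ9.txt", "abc123XYZ9-RECOVER-README.txt", "notes.txt"):
    print(name, "->", extract_company_id(name))
```

A filename match on its own is weak evidence, so a check like this is best combined with other signals such as the volume and spread of the writes.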

April 2023: Manufacturing sector breach with large-scale exfiltration

Key findings

- Initial access & movement: Extensive scanning and lateral movement via SMB, RDP, and WMI

- Credential abuse: Use of default credentials (admin, administrator)

- Malware/Indicators: Evidence of Cobalt Strike; suspicious WebDAV user agent and JA3 fingerprint

- Data exfiltration: ~30 GB stolen via SSL to MEGA cloud storage

- Darktrace analysis: Detected anomalous SMB and DCE-RPC traffic from domain controller, high-volume RDP activity, and rare external connectivity to IPs tied to command-and-control (C2). Confirmed ransom notes followed Qilin naming conventions.

Full story

The next case of Qilin ransomware observed by Darktrace took place in April 2023 on the network of a customer in the manufacturing sector in APAC. Unfortunately for the customer in this instance, Darktrace's Autonomous Response was not active in their environment, so no autonomous actions were taken to contain the compromise.

Over the course of two days, Darktrace identified a wide range of malicious activity ranging from extensive initial scanning and lateral movement attempts to the writing of ransom notes that followed the aforementioned naming convention (i.e., “README-RECOVER-<extension/company_id>.txt”).

Darktrace observed two affected devices attempting to move laterally through the SMB, DCE-RPC and RDP network protocols. Default credentials (e.g., UserName, admin, administrator) were also observed in the large volumes of SMB sessions initiated by these devices. One of the target devices of these SMB connections was a domain controller, which was subsequently seen making suspicious WMI requests to multiple devices over DCE-RPC and enumerating SMB shares by binding to the ‘server service’ (srvsvc) named pipe on a high number of internal devices within a short time frame. The domain controller was further detected establishing an anomalously high number of connections to several internal devices, notably using the RDP administrative protocol via a default admin cookie.
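The idea of one device contacting a high number of internal devices within a short time frame can be approximated with a sliding-window count of distinct destinations per source. The sketch below is a simplified, fixed-threshold stand-in and not Darktrace's anomaly-based detection. The window length, the threshold, the event format, and the device names are all assumptions made for illustration.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple

# (epoch seconds, source device, destination device); a hypothetical log shape.
Event = Tuple[float, str, str]


def find_fan_out(events: Iterable[Event], window_s: float = 60.0,
                 min_destinations: int = 20) -> List[Tuple[str, float, int]]:
    """Return (source, timestamp, distinct destinations) whenever a source
    reaches min_destinations distinct destinations inside the time window."""
    recent: Dict[str, Deque[Tuple[float, str]]] = defaultdict(deque)
    alerts: List[Tuple[str, float, int]] = []
    for ts, src, dst in sorted(events):
        queue = recent[src]
        queue.append((ts, dst))
        # Drop connections that have aged out of the window.
        while queue and ts - queue[0][0] > window_s:
            queue.popleft()
        distinct = len({d for _, d in queue})
        if distinct >= min_destinations:
            alerts.append((src, ts, distinct))
            queue.clear()  # start a fresh window so one burst yields one alert
    return alerts


# Synthetic data: "dc01" touches 25 hosts in 25 seconds, "ws07" one host per 100 s.
burst = [(float(i), "dc01", f"host{i:02d}") for i in range(25)]
slow = [(float(i * 100), "ws07", f"host{i:02d}") for i in range(25)]
print(find_fan_out(burst + slow))
```

A fixed threshold like this would need tuning per environment, which is one reason behavior-based baselines are generally preferred for this kind of activity.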

Repeated connections over the HTTP and SSL protocols to multiple newly observed IPs located in the 184.168.123.0/24 range were observed, indicating C2 connectivity. Notably, a WebDAV user agent and a JA3 fingerprint potentially associated with Cobalt Strike were observed in these connections. A few hours later, Darktrace detected additional suspicious external connections, this time to IPs associated with the MEGA cloud storage solution. Storage solutions such as MEGA are often abused by attackers to host stolen data post exfiltration. In this case, the endpoints were all rare for the network, suggesting this solution was not commonly used by legitimate users. Around 30 GB of data was exfiltrated over the SSL protocol.
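When reviewing connection logs for this activity, destination addresses can be tested against the 184.168.123.0/24 range with Python's standard `ipaddress` module. This is a minimal sketch. The function name is illustrative, and the sample addresses are taken from the indicators reported in this article.

```python
import ipaddress

# The range reported above as hosting the newly observed endpoints.
SUSPECT_RANGE = ipaddress.ip_network("184.168.123.0/24")


def in_suspect_range(address: str) -> bool:
    """Return True if the address falls inside the reported /24 range."""
    return ipaddress.ip_address(address) in SUSPECT_RANGE


# Sample destinations: one inside the range, one outside it.
for destination in ("184.168.123.220", "194.165.16.13"):
    print(destination, in_suspect_range(destination))
```

A range match only narrows down candidates. The whole /24 should not be treated as malicious, since only specific addresses within it were observed.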

Darktrace did not observe any encryption-related activity on this customer’s network, suggesting that encryption may have taken place locally or within network segments not monitored by Darktrace.

May 2024: US enterprise compromise

Key findings

- Initial access & movement: Abuse of administrative and default credentials; lateral movement via DCE-RPC and RDP

- Malware/Indicators: Suspicious executables (‘a157496.exe’, ‘83b87b2.exe’); abuse of RPC service LSM_API_service

- Data exfiltration: Large amount of data exfiltrated via FTP and other channels to rare external endpoint (194.165.16[.]13)

- C2 communications: HTTP/SSL traffic linked to Cobalt Strike, including PowerShell request for sihost64.dll

- Darktrace analysis: Flagged unusual SMB writes, malicious file transfers, and large-scale exfiltration as highly anomalous. Confirmed widespread encryption activity targeting numerous devices and shares.

Full story

The most recent instance of Qilin observed by Darktrace took place in May 2024 and involved a customer in the US.

In this case, Darktrace initially detected affected devices using unusual administrative and default credentials. Darktrace then observed additional internal systems conducting abnormal activity such as:

- Making extensive suspicious DCE-RPC requests to a range of internal locations

- Performing network scanning

- Making unusual internal RDP connections

- Transferring suspicious executable files such as 'a157496.exe' and '83b87b2.exe'

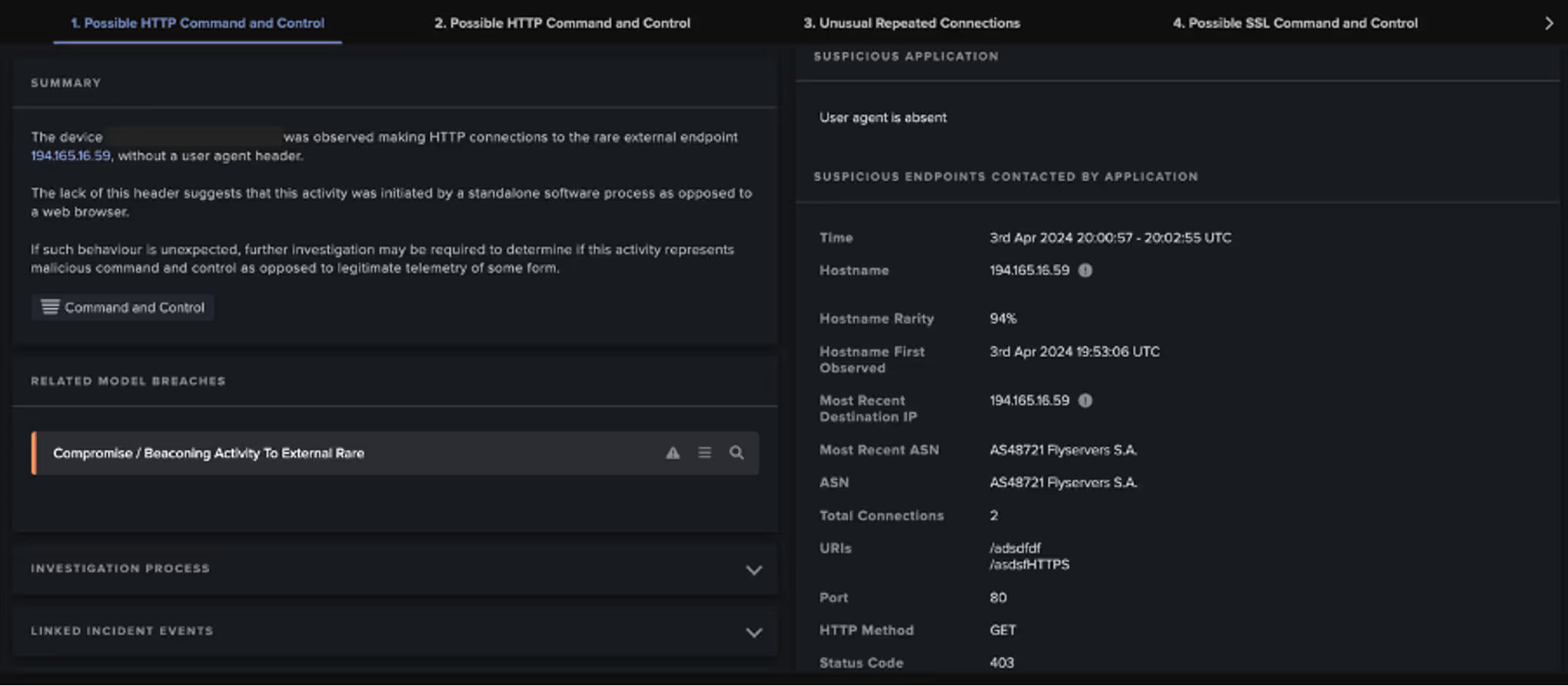

SMB writes of the file "LSM_API_service" were also observed, activity that Darktrace considered 100% unusual; LSM_API_service is an RPC service that can be abused to enumerate logged-in users and steal their tokens. Various repeated connections likely representative of C2 communications were detected via both HTTP and SSL to rare external endpoints linked in OSINT to Cobalt Strike use. During these connections, HTTP GET requests for the following URIs were observed:

/asdffHTTPS

/asdfgdf

/asdfgHTTP

/download/sihost64.dll

Notably, this included a GET request for a DLL file named "sihost64.dll", made from a domain controller using PowerShell.

Over 102 GB of data may have been transferred to another previously unseen endpoint, 194.165.16[.]13, via the unencrypted File Transfer Protocol (FTP). Additionally, many non-FTP connections to the endpoint could be observed, over which more than 783 GB of data was exfiltrated. Regarding file encryption activity, a wide range of destination devices and shares were targeted.

During investigations, Darktrace’s Threat Research team identified an additional customer, also based in the United States, where similar data exfiltration activity was observed in April 2024. Although no indications of ransomware encryption were detected on the network, multiple similarities were observed with the case discussed just prior. Notably, the same exfiltration IP and protocol (194.165.16[.]13 and FTP, respectively) were identified in both cases. Additional HTTP connectivity was further observed to another IP using a self-signed certificate (i.e., CN=ne[.]com,OU=key operations,O=1000,L=,ST=,C=KM) located within the same ASN (i.e., AS48721 Flyservers S.A.). Some of the URIs seen in the GET requests made to this endpoint were the same as identified in that same previous case.

Information regarding another device also making repeated connections to the same IP was described in the second event of the same Cyber AI Analyst incident. Following this C2 connectivity, network scanning was observed from a compromised domain controller, followed by additional reconnaissance and lateral movement over the DCE-RPC and SMB protocols. Darktrace again observed SMB writes of the file "LSM_API_service", as in the previous case, activity which was also considered 100% unusual for the network. These similarities suggest the same actor or affiliate may have been responsible for activity observed, even though no encryption was observed in the latter case.

According to researchers at Microsoft, some of the IoCs observed on both affected accounts are associated with Pistachio Tempest, a threat actor reportedly associated with ransomware distribution. The Microsoft threat actor naming convention uses the term "tempest" to reference criminal organizations with motivations of financial gain that are not associated with high confidence with a known non-nation-state or commercial entity. While Pistachio Tempest’s TTPs have changed over time, their key elements still involve ransomware, exfiltration, and extortion. Once they have gained access to an environment, Pistachio Tempest typically utilizes additional tools to complement their use of Cobalt Strike, including the SystemBC RAT and the Sliver C2 framework. It has also been reported that Pistachio Tempest has experimented with various RaaS offerings, which recently included Qilin ransomware[4].

Conclusion

Qilin is a RaaS group that has gained notoriety recently due to high-profile attacks perpetrated by its affiliates. Despite its recent rise to prominence, the group likely includes affiliates and actors who were previously associated with other ransomware groups. These individuals bring their own modus operandi and utilize both known and novel TTPs and IoCs that differ from one attack to another.

Darktrace’s anomaly-based technology is inherently threat-agnostic, treating all RaaS variants equally regardless of the attackers’ tools and infrastructure. Deviations from a device’s ‘learned’ pattern of behavior during an attack enable Darktrace to detect and contain potentially disruptive ransomware attacks.

Credit to: Alexandra Sentenac, Emma Foulger, Justin Torres, Min Kim, Signe Zaharka for their contributions.

References

[1] https://www.sentinelone.com/anthology/agenda-qilin/

[2] https://www.group-ib.com/blog/qilin-ransomware/

[3] https://www.trendmicro.com/en_us/research/22/h/new-golang-ransomware-agenda-customizes-attacks.html

[4] https://www.microsoft.com/en-us/security/security-insider/pistachio-tempest

[5] https://www.trendmicro.com/en_us/research/22/h/new-golang-ransomware-agenda-customizes-attacks.html

[6] https://www.bleepingcomputer.com/forums/t/790240/agenda-qilin-ransomware-id-random-10-char;-recover-readmetxt-support/

[7] https://github.com/threatlabz/ransomware_notes/tree/main/qilin

Darktrace Model Detections

Internal Reconnaissance

Device / Suspicious SMB Scanning Activity

Device / Network Scan

Device / RDP Scan

Device / ICMP Address Scan

Device / Suspicious Network Scan Activity

Anomalous Connection / SMB Enumeration

Device / New or Uncommon WMI Activity

Device / Attack and Recon Tools

Lateral Movement

Device / SMB Session Brute Force (Admin)

Device / Large Number of Model Breaches from Critical Network Device

Device / Multiple Lateral Movement Model Breaches

Anomalous Connection / Unusual Admin RDP Session

Device / SMB Lateral Movement

Compliance / SMB Drive Write

Anomalous Connection / New or Uncommon Service Control

Anomalous Connection / Anomalous DRSGetNCChanges Operation

Anomalous Server Activity / Domain Controller Initiated to Client

User / New Admin Credentials on Client

C2 Communication

Anomalous Server Activity / Outgoing from Server

Anomalous Connection / Multiple Connections to New External TCP Port

Anomalous Connection / Anomalous SSL without SNI to New External

Anomalous Connection / Rare External SSL Self-Signed

Device / Increased External Connectivity

Unusual Activity / Unusual External Activity

Compromise / New or Repeated to Unusual SSL Port

Anomalous Connection / Multiple Failed Connections to Rare Endpoint

Device / Suspicious Domain

Compromise / Sustained SSL or HTTP Increase

Compromise / Botnet C2 Behaviour

Anomalous Connection / POST to PHP on New External Host

Anomalous Connection / Multiple HTTP POSTs to Rare Hostname

Anomalous File / EXE from Rare External Location

Exfiltration

Unusual Activity / Enhanced Unusual External Data Transfer

Anomalous Connection / Data Sent to Rare Domain

Unusual Activity / Unusual External Data Transfer

Anomalous Connection / Uncommon 1 GiB Outbound

Unusual Activity / Unusual External Data to New Endpoint

Compliance / FTP / Unusual Outbound FTP

File Encryption

Compromise / Ransomware / Suspicious SMB Activity

Anomalous Connection / Sustained MIME Type Conversion

Anomalous File / Internal / Additional Extension Appended to SMB File

Compromise / Ransomware / Possible Ransom Note Write

Compromise / Ransomware / Possible Ransom Note Read

Anomalous Connection / Suspicious Read Write Ratio

IoC List

IoC – Type – Description + Confidence

93.115.25[.]139 – IP – C2 Server, likely associated with SystemBC

194.165.16[.]13 – IP – Probable Exfiltration Server

91.238.181[.]230 – IP – C2 Server, likely associated with Cobalt Strike

ikea0[.]com – Hostname – C2 Server, likely associated with Cobalt Strike

lebondogicoin[.]com – Hostname – C2 Server, likely associated with Cobalt Strike

184.168.123[.]220 – IP – Possible C2 Infrastructure

184.168.123[.]219 – IP – Possible C2 Infrastructure

184.168.123[.]236 – IP – Possible C2 Infrastructure

184.168.123[.]241 – IP – Possible C2 Infrastructure

184.168.123[.]247 – IP – Possible C2 Infrastructure

184.168.123[.]251 – IP – Possible C2 Infrastructure

184.168.123[.]252 – IP – Possible C2 Infrastructure

184.168.123[.]229 – IP – Possible C2 Infrastructure

184.168.123[.]246 – IP – Possible C2 Infrastructure

184.168.123[.]230 – IP – Possible C2 Infrastructure

gfs440n010.userstorage.mega.co[.]nz – Hostname – Possible Exfiltration Server. Not inherently malicious; associated with MEGA file storage.
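The indicators above are written in defanged form, with the final dot replaced by "[.]" so they cannot be clicked or resolved by accident. Before loading them into a watch list they need to be converted back. The helpers below are a minimal sketch of that round trip. The function names are illustrative, and the defanging style simply mirrors the one used in this list.

```python
import ipaddress


def refang(indicator: str) -> str:
    """Convert a defanged indicator such as '194.165.16[.]13' to usable form."""
    return indicator.replace("[.]", ".")


def defang(indicator: str) -> str:
    """Defang by bracketing the last dot, matching the style used in this list."""
    if "[.]" in indicator:
        return indicator  # already defanged
    head, sep, tail = indicator.rpartition(".")
    return f"{head}[.]{tail}" if sep else indicator


def indicator_type(indicator: str) -> str:
    """Classify an indicator as 'IP' or 'Hostname' after refanging it."""
    try:
        ipaddress.ip_address(refang(indicator))
        return "IP"
    except ValueError:
        return "Hostname"


for ioc in ("194.165.16[.]13", "ikea0[.]com"):
    print(indicator_type(ioc), refang(ioc), defang(refang(ioc)))
```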
