Introduction

On August 26, 2025, Google Threat Intelligence Group (GTIG) released a report detailing a widespread data theft campaign targeting the sales automation platform Salesloft, via compromised OAuth tokens used by the third-party Drift AI chat agent [1][2]. The attack has been attributed to the threat actor UNC6395 by Google Threat Intelligence and Mandiant [1].

The attack is believed to have begun in early August 2025 and continued until mid-August 2025 [1], with the threat actor exporting significant volumes of data from multiple Salesforce instances [1] and then sifting through this data for anything that could be used to compromise victims’ environments, such as access keys, tokens, or passwords. This led Google Threat Intelligence Group to assess that the threat actor’s primary intent is credential harvesting, and it later reported that it was aware of more than 700 potentially impacted organizations [3].

Salesloft previously stated that, based on currently available data, customers that do not integrate with Salesforce are unaffected by this campaign [2]. However, on August 28, Google Threat Intelligence Group announced that “Based on new information identified by GTIG, the scope of this compromise is not exclusive to the Salesforce integration with Salesloft Drift and impacts other integrations” [2]. Google Threat Intelligence has since advised that any and all authentication tokens stored in or connected to the Drift platform be treated as potentially compromised [1].

This campaign demonstrates how attackers are increasingly exploiting trusted Software-as-a-Service (SaaS) integrations as a pathway into enterprise environments.

By abusing these integrations, threat actors were able to exfiltrate sensitive business data at scale, bypassing traditional security controls. Rather than relying on malware or obvious intrusion techniques, the adversaries leveraged legitimate credentials and API traffic that resembled legitimate Salesforce activity to achieve their goals. This type of activity is far harder to detect with conventional security tools, since it blends in with the daily noise of business operations.

The incident underscores the escalating significance of autonomous coverage within SaaS and third-party ecosystems. As businesses increasingly depend on interconnected platforms, visibility gaps become evident that cannot be managed by conventional perimeter and endpoint defenses.

By developing a behavioral understanding of each organization's distinct use of cloud services, anomalies can be detected, such as logins from unexpected locations, unusually high volumes of API requests, or unusual document activity. These indicators serve as an early alert system, even when intruders use legitimate tokens or accounts, enabling security teams to step in before extensive data exfiltration takes place.

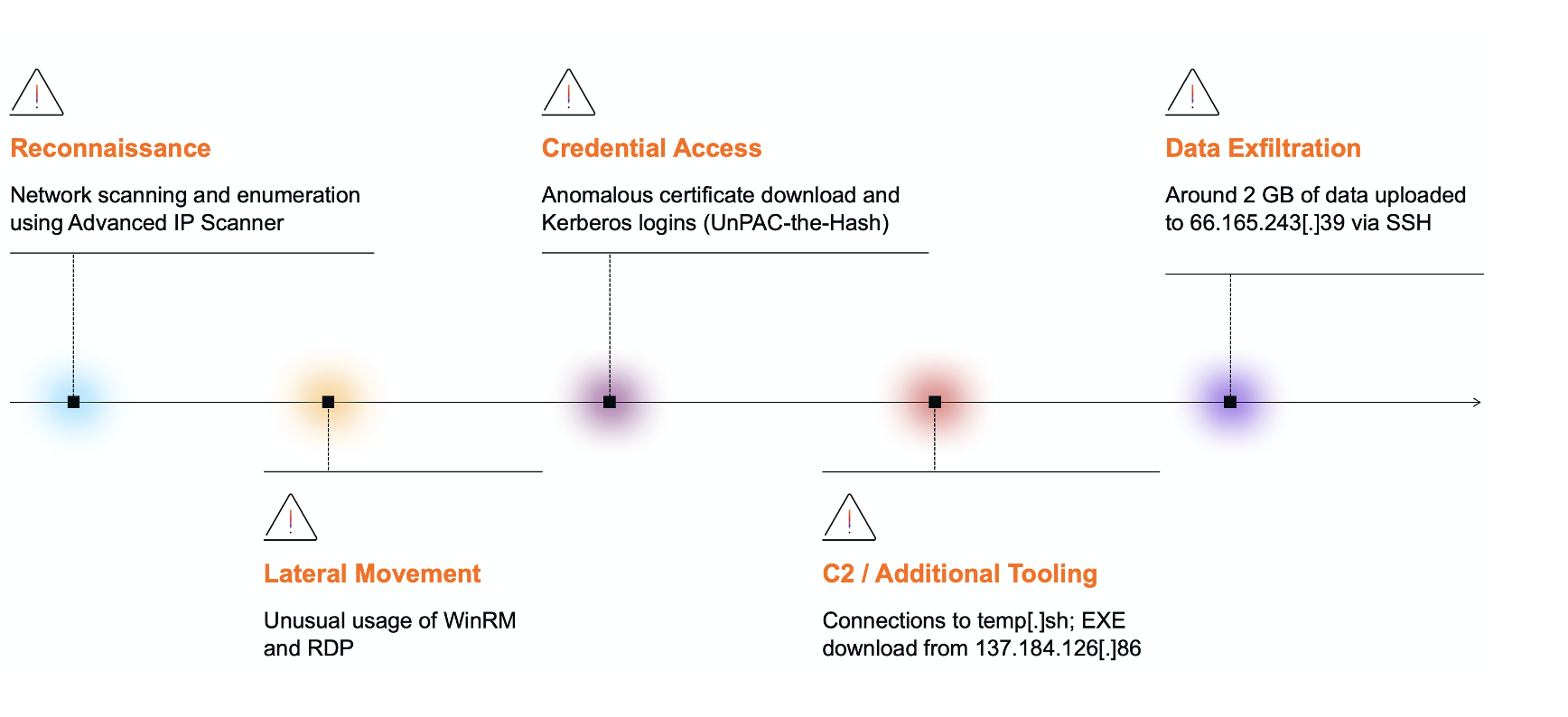

What happened?

The campaign is believed to have started on August 8, 2025, with malicious activity continuing until at least August 18. The threat actor, tracked as UNC6395, gained access via compromised OAuth tokens associated with Salesloft Drift integrations into Salesforce [1]. Once tokens were obtained, the attackers were able to issue large volumes of Salesforce API requests, exfiltrating sensitive customer and business data.

Initial Intrusion

The attackers first established access by abusing OAuth and refresh tokens from the Drift integration. These tokens gave them persistent access into Salesforce environments without requiring further authentication [1]. To expand their foothold, the threat actor also made use of TruffleHog [4], an open-source secrets scanner, to hunt for additional exposed credentials. Logs later revealed anomalous IAM updates, including unusual UpdateAccessKey activity, which suggested attempts to ensure long-term persistence and control within compromised accounts.
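For teams that want to review their own logs for similar access-key changes, the check can be sketched as below. This is a minimal illustration, assuming the IAM activity is recorded by AWS CloudTrail (the source does not name the log source); the function name and sample record are hypothetical.

```python
import json

# Assumption: IAM activity is reviewed via AWS CloudTrail, whose log files
# hold a top-level "Records" list of event dictionaries.
WATCHED_EVENTS = {"UpdateAccessKey"}


def find_access_key_changes(cloudtrail_json: str) -> list:
    """Summarize watched IAM access-key events found in one log file."""
    records = json.loads(cloudtrail_json).get("Records", [])
    hits = []
    for record in records:
        if record.get("eventSource") != "iam.amazonaws.com":
            continue
        if record.get("eventName") not in WATCHED_EVENTS:
            continue
        hits.append({
            "time": record.get("eventTime"),
            "event": record.get("eventName"),
            "source_ip": record.get("sourceIPAddress"),
            "actor": record.get("userIdentity", {}).get("arn"),
        })
    return hits


# Hypothetical sample log, for illustration only.
SAMPLE_LOG = json.dumps({"Records": [
    {"eventSource": "iam.amazonaws.com", "eventName": "UpdateAccessKey",
     "eventTime": "2025-08-12T10:00:00Z", "sourceIPAddress": "203.0.113.10",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/example"}},
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "eventTime": "2025-08-12T10:01:00Z", "sourceIPAddress": "203.0.113.10",
     "userIdentity": {}},
]})
```

Calling `find_access_key_changes(SAMPLE_LOG)` returns only the IAM event. Whether a given change is anomalous still depends on who normally performs it and from where.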

Internal Reconnaissance & Data Exfiltration

Once inside, the adversaries began exploring the Salesforce environments. They ran queries designed to pull sensitive data fields, focusing on objects such as Cases, Accounts, Users, and Opportunities [1]. At the same time, the attackers sifted through this information to identify secrets that could enable access to other systems, including AWS keys and Snowflake credentials [4]. This phase demonstrated the opportunistic nature of the campaign, with the actors looking for any data that could be repurposed for further compromise.
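Defenders can apply the same idea to their own Salesforce data to find secrets that users may have pasted into free-text fields such as case comments. The sketch below is a rough illustration only: the patterns are simple heuristics chosen for this example, not the detectors used by TruffleHog or by the threat actor, and the function name is hypothetical.

```python
import re

# Illustrative patterns only. Real scanners use many more detectors and
# verify candidates; the keyword pattern here is a loose heuristic.
PATTERNS = {
    # AWS access key IDs: a 4-letter prefix followed by 16 characters.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Snowflake account hostnames end in snowflakecomputing.com.
    "snowflake_host": re.compile(r"\b[\w.-]+\.snowflakecomputing\.com\b", re.I),
    # Assignments such as "password: value" or "token=value".
    "keyword_secret": re.compile(
        r"\b(?:password|passwd|secret|token)\s*[:=]\s*\S+", re.I
    ),
}


def scan_text(text: str) -> list:
    """Return (pattern_name, matched_text) pairs found in a free-text field."""
    findings = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((name, match.group(0)))
    return findings
```

Any hit should be treated as a prompt to rotate the credential, since the presence of a secret in exported data is what made it useful to the attackers.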

Lateral Movement

Salesloft and Mandiant investigations revealed that the threat actor also created at least one new user account in early September. Although follow-up activity linked to this account was limited, the creation itself suggested a persistence mechanism designed to survive remediation efforts. By maintaining a separate identity, the attackers ensured they could regain access even if their stolen OAuth tokens were revoked.

Accomplishing the mission

The data taken from Salesforce environments included valuable business records, which attackers used to harvest credentials and identify high-value targets. According to Mandiant, once the data was exfiltrated, the actors actively sifted through it to locate sensitive information that could be leveraged in future intrusions [1]. In response, Salesforce and Salesloft revoked OAuth tokens associated with Drift integrations on August 20 [1], a containment measure aimed at cutting off the attackers’ primary access channel and preventing further abuse.

How did the attack bypass the rest of the security stack?

The campaign effectively bypassed security measures by using legitimate credentials and OAuth tokens through the Salesloft Drift integration. This rendered traditional security defenses like endpoint protection and firewalls ineffective, as the activity appeared non-malicious [1]. The attackers blended into normal operations by using common user agents and making queries through the Salesforce API, which made their activity resemble legitimate integrations and scripts. This allowed them to operate undetected in the SaaS environment, exploiting the trust in third-party connections and highlighting the limitations of traditional detection controls.

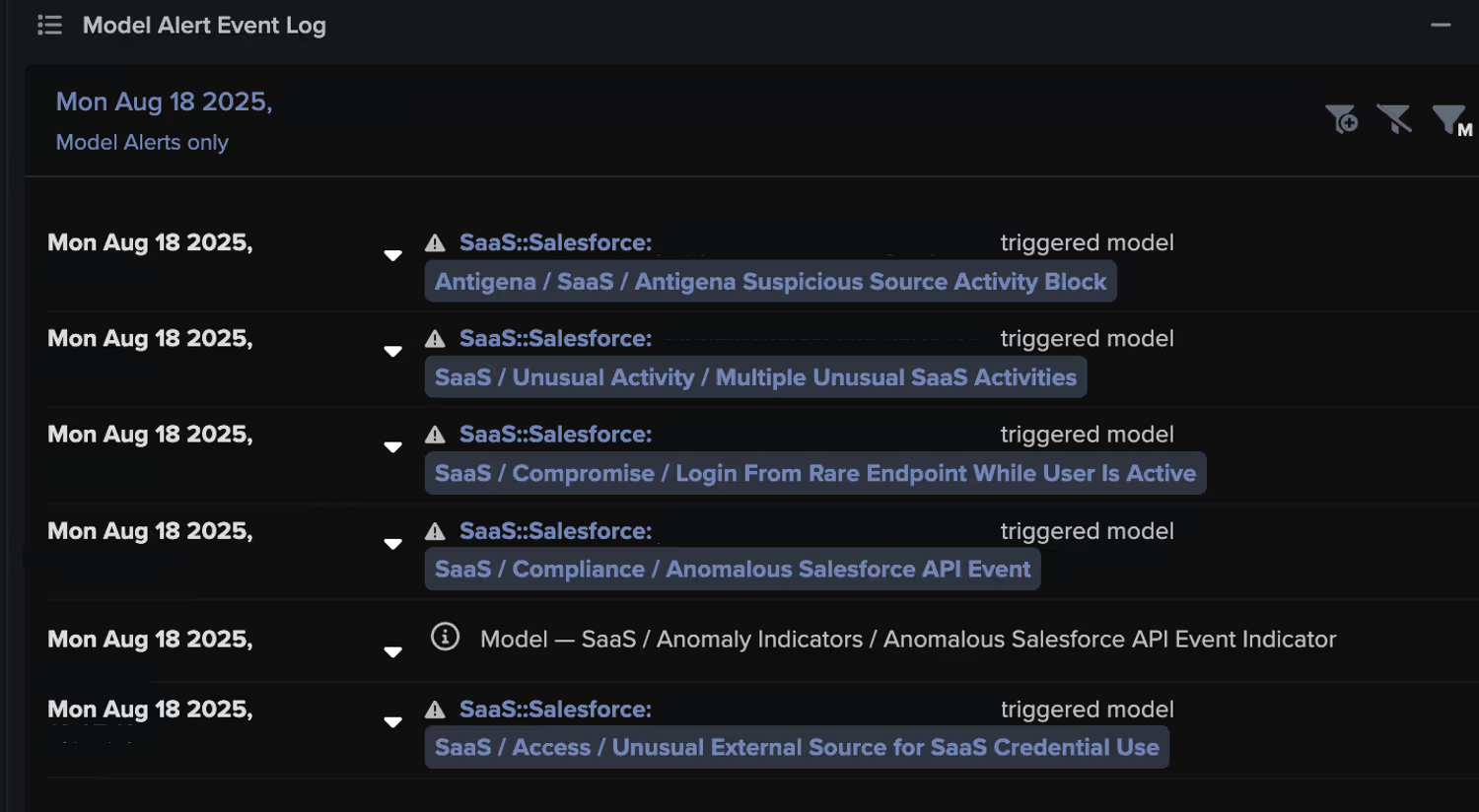

Darktrace Coverage

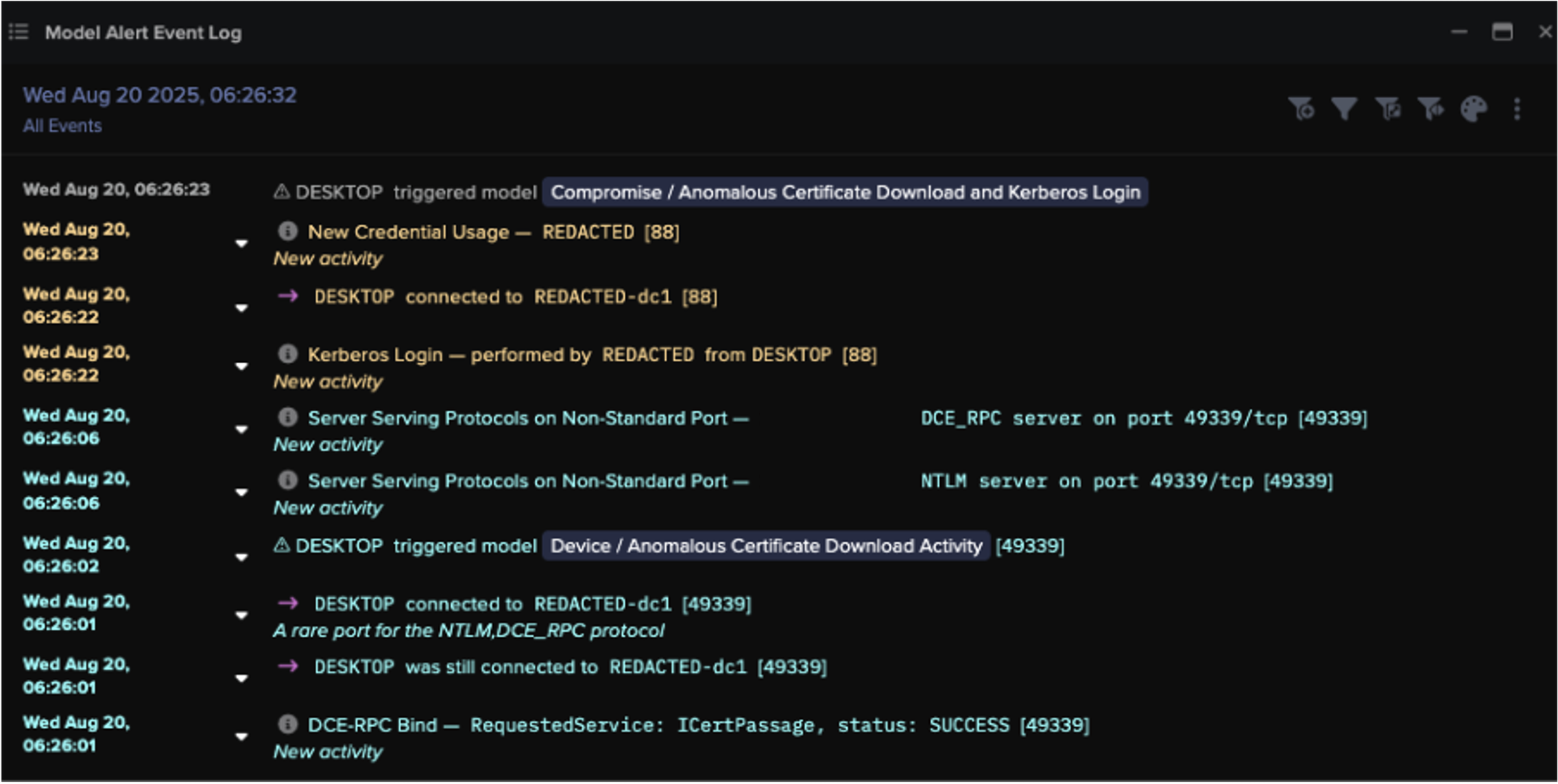

Anomalous activities that appear to be associated with this campaign have been identified across multiple Darktrace deployments. These included two cases involving customers based in the United States who had a Salesforce integration, where the pattern of activity was notably similar.

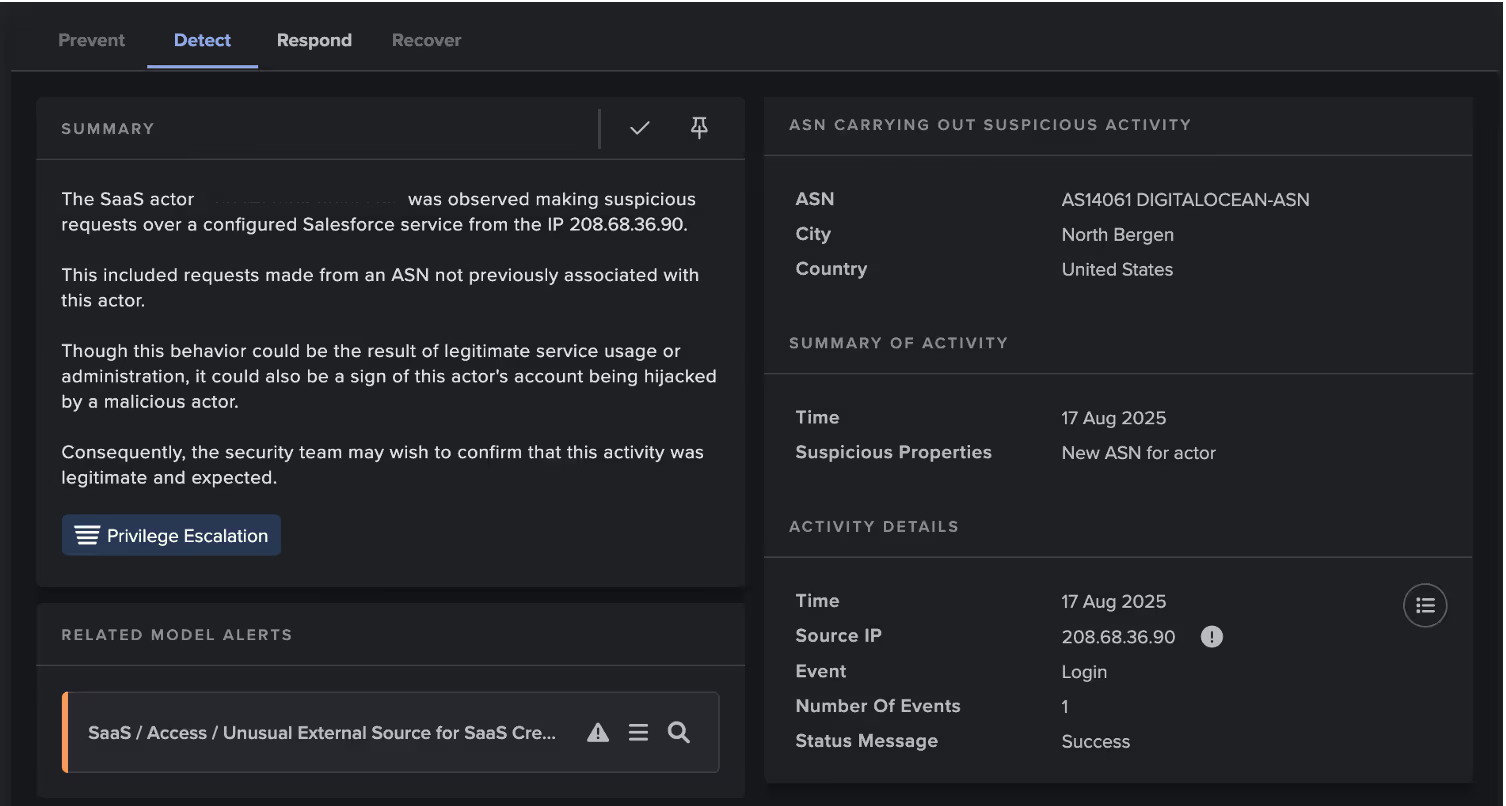

On August 17, Darktrace observed an account belonging to one of these customers logging in from the rare endpoint 208.68.36[.]90, while the user was seen active from another location. This IP is a known indicator of compromise (IoC) reported by open-source intelligence (OSINT) for the campaign [2].

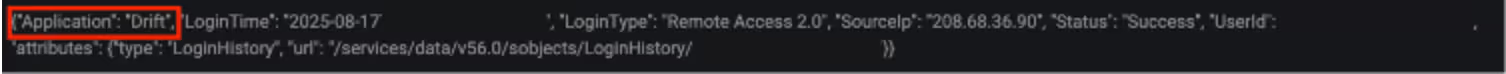

The login event was associated with the application Drift, further connecting the events to this campaign.

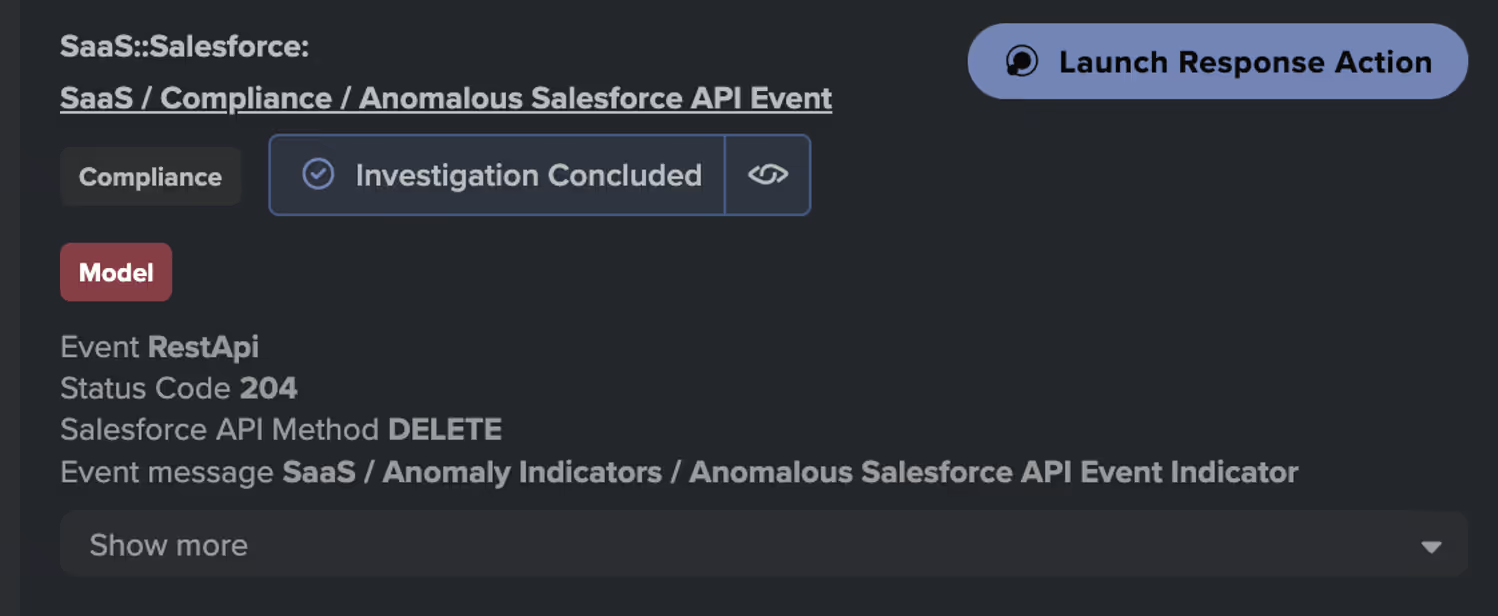

Following the login, the actor initiated a high volume of Salesforce API requests using methods such as GET, POST, and DELETE. The GET requests targeted endpoints like /services/data/v57.0/query and /services/data/v57.0/sobjects/Case/describe. The former retrieves records matching specified criteria, while the latter returns metadata for the Case object, including field names and data types [5,6].
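To make the shape of these two GET requests concrete, the paths can be sketched as below. The helper names and the SOQL statement in the usage note are hypothetical and are not taken from the observed traffic; only the API version and resource paths come from the requests described above.

```python
from urllib.parse import urlencode

API_VERSION = "v57.0"  # version seen in the observed requests


def query_path(soql: str) -> str:
    """Path for the REST query resource, with the SOQL statement URL-encoded."""
    return f"/services/data/{API_VERSION}/query?" + urlencode({"q": soql})


def describe_path(sobject: str) -> str:
    """Path for the sObject describe resource, which returns field metadata."""
    return f"/services/data/{API_VERSION}/sobjects/{sobject}/describe"
```

For example, `describe_path("Case")` yields the describe endpoint seen in the logs, and `query_path("SELECT Id FROM Case")` yields a query request for Case record IDs. A describe call followed by queries on the same object is consistent with an actor first learning which fields exist and then pulling them.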

Subsequently, a POST request to /services/data/v57.0/jobs/query was observed, likely to initiate a Bulk API query job for extracting large volumes of data from the Ingest Job endpoint [7,8].

Finally, a DELETE request was observed that removed an ingestion job batch, possibly in an attempt to obscure traces of prior data access or manipulation.

A second case, involving another US-based customer, took place a day later, on August 18. This again began with an account logging in from the rare IP 208.68.36[.]90 involving the application Drift. This was followed by Salesforce GET requests targeting the same endpoints as seen in the previous case, then a POST to the Ingest Job endpoint, and finally a DELETE request, all occurring within one minute of the initial suspicious login.

The chain of anomalous behaviors, including a suspicious login and delete request, resulted in Darktrace’s Autonomous Response capability suggesting a ‘Disable user’ action. However, the customer’s deployment configuration required manual confirmation for the action to take effect.
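The sequence seen in both cases can be expressed as a simple rule, sketched below. This is a fixed-rule toy example and not how Darktrace's behavioral models work; the `Event` schema, the `known_ips` baseline, and the five-minute window are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    # Hypothetical, simplified log schema used only for this sketch.
    time: datetime
    user: str
    action: str          # "LOGIN", "GET", "POST" or "DELETE"
    source_ip: str = ""
    path: str = ""


def flag_suspicious_chains(events, known_ips, window=timedelta(minutes=5)):
    """Return users whose login from an unfamiliar IP is followed, within
    `window`, by both a Bulk query job POST and a DELETE request."""
    flagged = []
    ordered = sorted(events, key=lambda e: e.time)
    for i, login in enumerate(ordered):
        if login.action != "LOGIN":
            continue
        # Skip logins from addresses already associated with this user.
        if login.source_ip in known_ips.get(login.user, set()):
            continue
        followups = [
            e for e in ordered[i + 1:]
            if e.user == login.user and e.time - login.time <= window
        ]
        posted_job = any(
            e.action == "POST" and e.path.endswith("/jobs/query")
            for e in followups
        )
        deleted = any(e.action == "DELETE" for e in followups)
        if posted_job and deleted and login.user not in flagged:
            flagged.append(login.user)
    return flagged
```

A static rule like this only catches the exact pattern it encodes. The value of a learned baseline is that a rare source IP or an unusual DELETE stands out even when the rest of the sequence changes.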

Conclusion

This incident underscores the escalating risks of SaaS supply chain attacks, where third-party integrations can become avenues for attacks. It demonstrates how adversaries can exploit legitimate OAuth tokens and API traffic to circumvent traditional defenses. This emphasizes the necessity for constant monitoring of SaaS and cloud activity, beyond just endpoints and networks, while also reinforcing the significance of applying least privilege access and routinely reviewing OAuth permissions in cloud environments. Furthermore, it provides a wider perspective on the evolution of the threat landscape, which is shifting towards credential and token abuse as opposed to malware-driven compromise.

Credit to Emma Foulger (Global Threat Research Operations Lead), Calum Hall (Technical Content Researcher), Signe Zaharka (Principal Cyber Analyst), Min Kim (Senior Cyber Analyst), Nahisha Nobregas (Senior Cyber Analyst), Priya Thapa (Cyber Analyst)

Appendices

Darktrace Model Detections

· SaaS / Access / Unusual External Source for SaaS Credential Use

· SaaS / Compromise / Login From Rare Endpoint While User Is Active

· SaaS / Compliance / Anomalous Salesforce API Event

· SaaS / Unusual Activity / Multiple Unusual SaaS Activities

· Antigena / SaaS / Antigena Unusual Activity Block

· Antigena / SaaS / Antigena Suspicious Source Activity Block

Customers should consider integrating Salesforce with Darktrace where possible. These integrations allow better visibility and correlation to spot unusual behavior and possible threats.

IoC List

(IoC – Type)

· 208.68.36[.]90 – IP Address
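Indicators in this list are defanged, so they need to be converted back before being matched against login records. A minimal sketch, assuming login source addresses are available as plain strings (the function names are hypothetical):

```python
import ipaddress


def refang(indicator: str) -> str:
    """Convert a defanged indicator such as 1.2.3[.]4 back to its plain form."""
    return indicator.replace("[.]", ".")


def matching_logins(login_ips, defanged_iocs):
    """Return the login IPs that equal any listed indicator."""
    iocs = {ipaddress.ip_address(refang(i)) for i in defanged_iocs}
    return [ip for ip in login_ips if ipaddress.ip_address(ip) in iocs]
```

For instance, `matching_logins(["192.0.2.1", "208.68.36.90"], ["208.68.36[.]90"])` returns only the second address.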

References

2. https://trust.salesloft.com/?uid=Drift+Security+Update%3ASalesforce+Integrations+%283%3A30PM+ET%29

3. https://thehackernews.com/2025/08/salesloft-oauth-breach-via-drift-ai.html

4. https://unit42.paloaltonetworks.com/threat-brief-compromised-salesforce-instances/

5. https://developer.salesforce.com/docs/atlas.en-us.api_rest.meta/api_rest/resources_query.htm

7. https://developer.salesforce.com/docs/atlas.en-us.api_asynch.meta/api_asynch/get_job_info.htm

8. https://developer.salesforce.com/docs/atlas.en-us.api_asynch.meta/api_asynch/query_create_job.htm


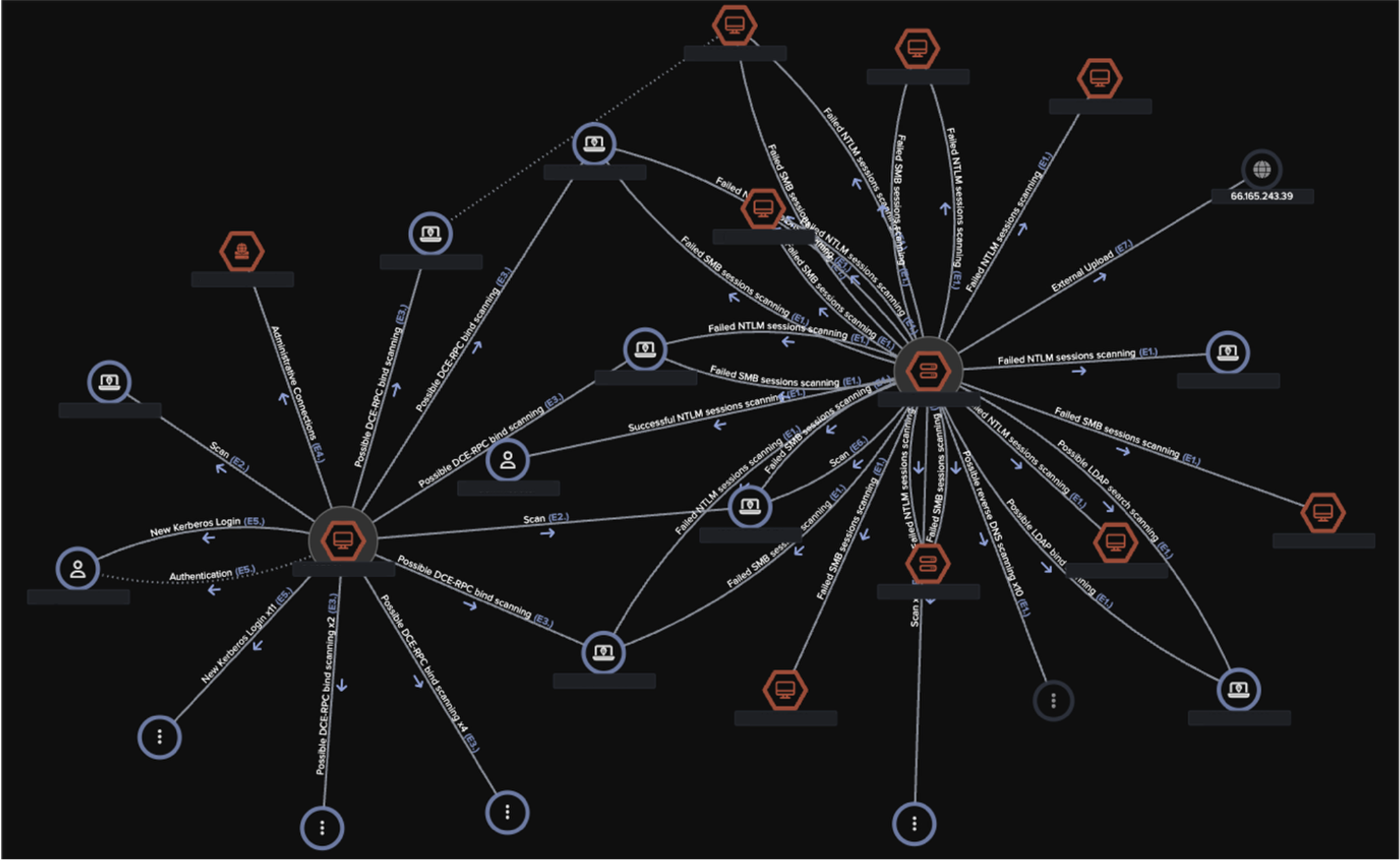

![Cyber AI Analyst incident view highlighting multiple unusual events across several devices on August 20. Notably, it includes the “Unusual External Data Transfer” event, which corresponds to the anomalous 2 GB data upload to the known Akira-associated endpoint 66.165.243[.]39.](https://cdn.prod.website-files.com/626ff4d25aca2edf4325ff97/68e53b26d90343a0a6ab3bb2_Screenshot%202025-10-07%20at%209.09.02%E2%80%AFAM.png)