In recent years, cyber-criminals have increasingly directed their efforts toward sophisticated, long-haul attacks against major companies, a tactic known as “big game hunting.” Unlike standardized phishing campaigns that aim to deliver malware en masse, big game hunting involves exploiting the particular vulnerabilities of a single, high-value target. Catching such attacks requires AI-powered tools that learn what’s normal for each unique user and device, thereby shining a light on the subtle signs of unusual activity that these attacks introduce.

In the threat detailed below, cyber-criminals targeted a major firm with Ryuk ransomware, which Darktrace observed during a trial deployment period. Often deployed in the final stage of such tailored attacks, Ryuk encrypts only the crucial assets that the attackers have handpicked in each targeted environment. Here’s how this particular incident unfolded, as well as how AI Autonomous Response technology, if in active mode, would have contained the threat in seconds:

Incident overview

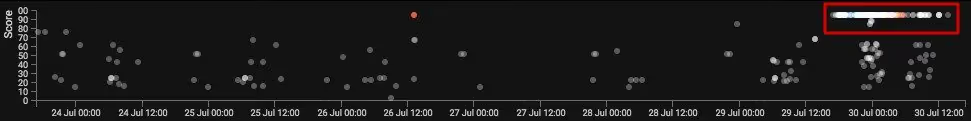

Rooted in its evolving understanding of ‘self’ for the targeted firm, Darktrace AI flagged myriad instances of anomalous behavior over the course of the incident — each represented by a dot in the visualization above. The anomalous activity is organized vertically according to how unusual each behavior was in comparison to “normal” for the users and devices involved. The colored dots represent particularly high-confidence detections, which should have prompted immediate investigation by the security team.
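To make the idea of ranking activity by how unusual it is for a given device more concrete, here is a minimal sketch. It is not Darktrace's algorithm: it assumes a simple z-score against the device's own history, and the function name `unusualness` and the sample counts are hypothetical.

```python
from statistics import mean, pstdev

def unusualness(history, observed):
    """Standard deviations between `observed` and the device's own history."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # A perfectly constant history: any deviation at all is maximally unusual.
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma

# Hypothetical daily outbound connection counts for one device.
baseline = [10, 12, 11, 9, 13]

print(unusualness(baseline, 11))  # matches the device's typical behavior
print(unusualness(baseline, 60))  # far outside anything seen before
```

The point of scoring each device against its own past, rather than against a global threshold, is that the same raw number can be routine for one machine and highly anomalous for another.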

Compromised admin keys

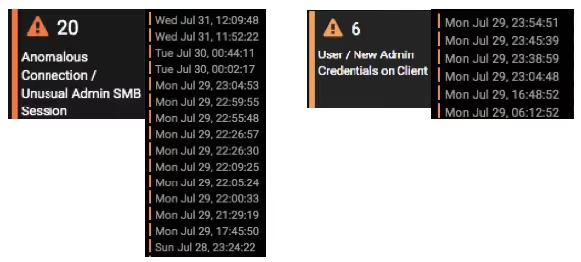

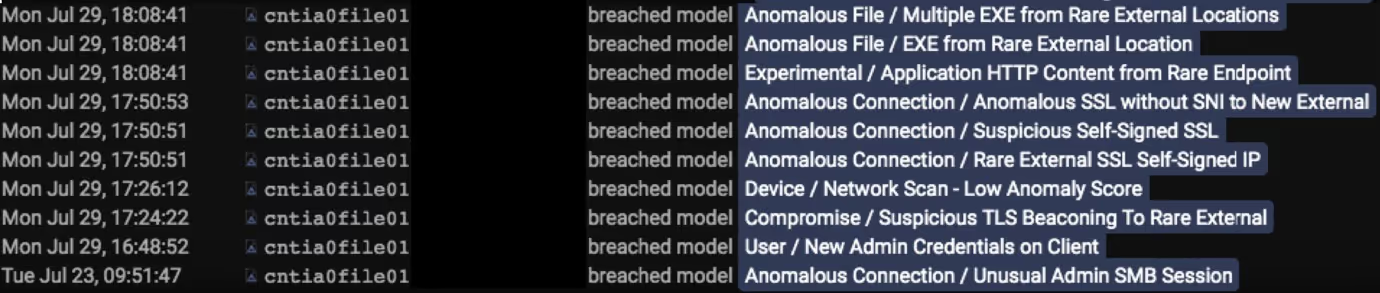

The first sign of attack was the highly unusual use of an administrator account not previously seen on the network, suggesting that the attackers had gained access to the account outside the limited scope of the Darktrace trial before moving laterally to the monitored environments. Had Darktrace been deployed across the digital infrastructure, the initial hijacking of the account would have been obvious right away. Nevertheless, Darktrace alerted on the anomalous admin session repeatedly and in real time, as shown below:

This behavior is typical of big game hunting. Rather than firing their payload straight away upon accessing the network, the attackers engaged in a longer-term compromise to attain the best position for a crippling attack.
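A first-seen check of the kind that would surface a previously unseen administrator account can be sketched as follows. This is an illustrative assumption rather than the product's logic; the class `CredentialBaseline` and the account and device names are hypothetical.

```python
class CredentialBaseline:
    """Remembers which accounts have been seen, and on which devices."""

    def __init__(self):
        self.known_accounts = set()
        self.known_pairs = set()

    def learn(self, account, device):
        # Record activity observed during the learning period.
        self.known_accounts.add(account)
        self.known_pairs.add((account, device))

    def check(self, account, device, is_admin=False):
        """Return the reasons a login looks unusual (empty list if none)."""
        reasons = []
        if account not in self.known_accounts:
            reasons.append("account never seen on this network")
        elif (account, device) not in self.known_pairs:
            reasons.append("account never seen on this device")
        if reasons and is_admin:
            # Unfamiliar activity matters more when the account is privileged.
            reasons.append("account holds admin privileges")
        return reasons

baseline = CredentialBaseline()
baseline.learn("alice", "ws-01")

print(baseline.check("alice", "ws-01"))                     # familiar login
print(baseline.check("svc-build", "fs-01", is_admin=True))  # unseen admin account
```

A check like this can only reason about the part of the network it observes, which is why limited trial coverage delayed visibility of the original hijacking.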

Infiltration via TrickBot

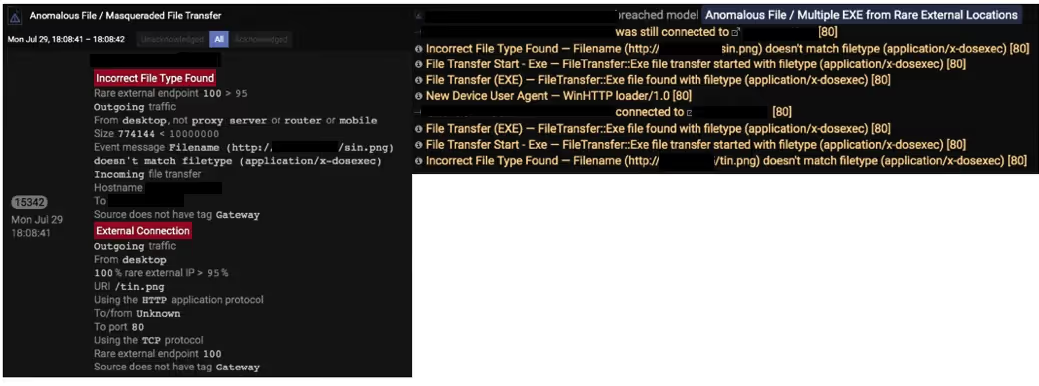

Darktrace then detected the infamous TrickBot banking trojan being downloaded onto the network. While the attacker already had access via the compromised admin credentials, TrickBot was used as a loader for further malicious files and as an additional command & control (C&C) channel. Among the most common post-exploitation steps were:

Command & Control communication

Once the TrickBot infection had begun, Darktrace observed C&C communication back to the attackers. Although many devices exhibited anomalous behavior, Darktrace pinpointed one device at the nexus of the infection. The image below illustrates the plethora of suspicious connections detected on this single device:

Using JA3, a TLS fingerprinting method that was the subject of a previous blog post, Darktrace detected a new piece of software repeatedly making encrypted connections from this device to multiple unusual destinations, a behavior known as beaconing.
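For readers unfamiliar with the two ideas in this step, the sketch below shows how a JA3 fingerprint is derived from TLS ClientHello fields and one simple way to measure how regular a series of connections is. The JA3 format (five comma-separated fields, values joined by dashes, GREASE values ignored, MD5 of the result) follows the public JA3 description. The `beacon_score` heuristic and all sample values are assumptions for illustration, not Darktrace's detection logic.

```python
import hashlib
from statistics import mean, pstdev

# GREASE placeholder values (0x0A0A, 0x1A1A, ... 0xFAFA) are ignored by JA3.
GREASE = {(i << 12) | 0x0A00 | (i << 4) | 0x0A for i in range(16)}

def ja3_string(version, ciphers, extensions, curves, point_formats):
    """Build the JA3 string from decimal ClientHello values."""
    fields = [ciphers, extensions, curves, point_formats]
    joined = ["-".join(str(v) for v in f if v not in GREASE) for f in fields]
    return ",".join([str(version)] + joined)

def ja3_hash(version, ciphers, extensions, curves, point_formats):
    """MD5 of the JA3 string: the fingerprint compared across connections."""
    text = ja3_string(version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(text.encode("ascii")).hexdigest()

def beacon_score(timestamps):
    """Coefficient of variation of the gaps between connections.

    Values near 0 mean the connections are evenly spaced, as automated
    check-ins tend to be. Returns None when there is too little data.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return None
    return pstdev(gaps) / mean(gaps)

# Hypothetical ClientHello values; the leading GREASE cipher is dropped.
hello = (771, [0x0A0A, 4865, 4866], [0, 10, 11], [29, 23], [0])
print(ja3_string(*hello))
print(ja3_hash(*hello))

print(beacon_score([0, 60, 120, 180]))  # evenly spaced connections
print(beacon_score([0, 5, 300, 310]))   # irregular, human-like timing
```

Because the fingerprint depends on how the client software builds its handshake rather than on the encrypted payload, a hash never before seen on a device suggests new software even when the traffic itself cannot be read.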

Ransomware encryption commences

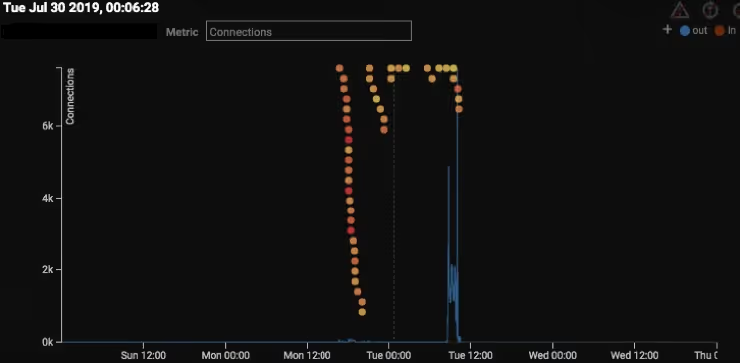

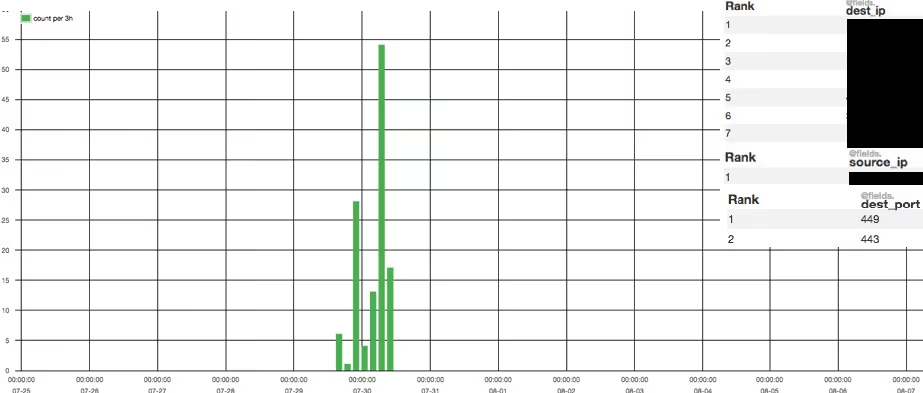

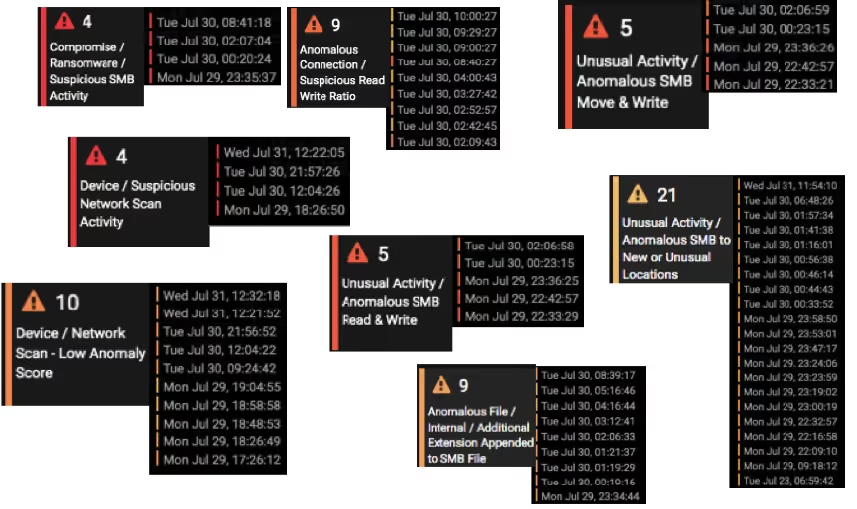

Once the connection with the C&C infrastructure was established, the Ryuk ransomware was finally deployed. During this “noisy” period, marked by a high volume of suspicious SMB activity, Darktrace indicated the seriousness and extent of the attack even more clearly:

In just 12 hours, Ryuk had encrypted more than 200,000 files. The entire incident took place over 36 hours — after that, the company shut down its network to prevent further damage.
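To put those figures in perspective, the short sketch below converts them into an average rate and shows how a sliding-window counter could flag such a burst of file writes. The constant rate, the 60-second window, and the 100-write threshold are hypothetical choices for illustration; the class `WriteRateMonitor` is not part of any product.

```python
from collections import deque

files_encrypted = 200_000  # lower bound reported for the incident
hours = 12
avg_per_second = files_encrypted / (hours * 3600)
print(round(avg_per_second, 2))  # 4.63

class WriteRateMonitor:
    """Flags a device when file writes in a sliding window exceed a limit."""

    def __init__(self, window_seconds, max_writes):
        self.window = window_seconds
        self.max_writes = max_writes
        self.events = deque()

    def record(self, t):
        """Record a write at time `t` (seconds); return True if over the limit."""
        self.events.append(t)
        # Discard writes that have aged out of the window.
        while self.events and self.events[0] <= t - self.window:
            self.events.popleft()
        return len(self.events) > self.max_writes

monitor = WriteRateMonitor(window_seconds=60, max_writes=100)
# Simulate writes arriving at the incident's average rate.
flags = [monitor.record(i / avg_per_second) for i in range(300)]
print(flags.index(True))  # position of the first write that trips the limit
```

Even at the average rate of more than four files per second, a fixed limit like this would trip within the first minute, although a real deployment would need a threshold tuned to each file server's normal workload.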

Ransomware retrospective

Following the incident, the business traced the initial compromise back to a part of their network in another country that Darktrace did not have visibility over during this trial period. The infection spread until it reached a recently installed file server that Darktrace was, in fact, monitoring. The attacker likely got access to an administrative account that had been used to build this server and, at that point, they had the access needed to fire the Ryuk ransomware.

This incident put Darktrace in the unique position of observing a ransomware attack wherein none of the alerts were seen or actioned by the internal IT team, demonstrating what such an attack can do absent any intervention and response. Had the company actively monitored its Darktrace deployment, the security team would have received and actioned the alerts in real time, as Darktrace’s thousands of users do on a daily basis.

Autonomous Response to the rescue

Had the firm deployed Autonomous Response technology, the lack of attention afforded to Darktrace’s alerts would not have mattered. Whereas four hours passed from the executable download to the first encrypted file, Autonomous Response would have neutralized the threat within seconds, preventing widespread damage and giving the security team the crucial time to catch up.

The screenshot below shows an excerpt of Darktrace’s detections at the beginning of the file server compromise. The detections are listed in chronological order from bottom to top, along with the action that Darktrace’s AI Autonomous Response tool, Antigena, would have taken:

In sum, Antigena would have taken appropriate action by enforcing normal behavior, rather than applying a binary block (e.g. completely quarantining the device) as legacy tools would.
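The difference between a binary block and enforcing normal behavior can be illustrated with a small sketch. This is a simplified assumption about the general idea, not Antigena's implementation; the baseline set, function names, and destinations are hypothetical.

```python
# Hypothetical learned baseline: (destination, port) pairs this device normally uses.
BASELINE = {("intranet.example", 443), ("mail.example", 587)}

def quarantine(connection):
    """Binary block: every connection from the device is dropped."""
    return "block"

def enforce_normal(connection, baseline=BASELINE):
    """Allow only connections that match the device's learned behavior."""
    return "allow" if connection in baseline else "block"

traffic = [
    ("intranet.example", 443),  # routine business traffic
    ("198.51.100.7", 443),      # unfamiliar external destination (documentation IP)
]

for conn in traffic:
    print(conn, quarantine(conn), enforce_normal(conn))
```

Under quarantine both connections are dropped, whereas enforcing the baseline drops only the unfamiliar one, so routine work on the device can continue while the threat is contained.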

To learn how Antigena neutralizes threats without interrupting normal business operations, check out our in-depth white paper: The Evolution of Autonomous Response: Fighting Back in a New Era of Cyber-Threat.